Social Interaction

Video-Based Studies of Human Sociality

Halting the Decay of Talk:

How Atypical Interactants Adapt their Virtual Worlds1

Nils Oliver Klowait1 & Maria Erofeeva2

1Paderborn University

2Université Libre de Bruxelles & Ulster University

Abstract

We investigate how people with atypical bodily capabilities interact within virtual reality (VR) and the way they overcome interactional challenges in these emerging social environments. Based on a videographic multimodal single case analysis, we demonstrate how non-speaking VR participants furnish their bodies, at-hand instruments, and their interactive environment for their practical purposes. Our findings are subsequently related to renewed discussions of the relationship between agency and environment, and the co-constructed nature of situated action. We thus aim to contribute to the growing vocabulary of atypical interaction analysis and the broader context of ethnomethodological conceptualizations of unorthodox and fractured interactional ecologies.

Keywords: technologically mediated communication, atypical interaction, virtual reality, multimodal conversation analysis

1. Introduction

Interaction analysis is increasingly facing a fractured ecology (Luff et al., 2003) where interactants are "within sight and sound" (Goffman, 1981, p. 145) of one another yet not necessarily co-present in the same physical space. Especially with the rise of working from home, the embedding of telemediated participation in everyday life has accelerated substantially. This development coincides with the refinement of multimodal conversation-analytic toolsets.

The growing attention to embodied action and the multimodality of interaction (C. Goodwin, 2000; Mondada, 2016; Nevile, 2015) brings with it a renewed interest in the role the material environment plays in social interaction (Nevile et al., 2014). In ethnomethodology and conversation analysis (EMCA), objects have been studied as facilitators of joint activities (Brassac et al., 2008; Fox & Heinemann, 2015; Lindström et al., 2017), as integral parts in the construction of basic actions (Day & Wagner, 2014; Mondada, 2019), and as means to maintain social relations (Licoppe et al., 2017). While objects play such constitutive roles, agency in its full scope is typically ascribed to humans; objects are instead treated as resources for, or accomplishments of, human action. At the same time, we have to deal with phenomena that disrupt members' current projects of action, such as latency in video calls (Seuren et al., 2021). In such cases, human agency is seen to be constrained by the material (technological) environment.2

Technologically mediated communication is akin to atypical interaction in that, in both, participants have limited resources at their disposal. For example, during a telephone conversation, we cannot see our interlocutor and read their non-verbal cues. For this reason, mediated communication has long been viewed as a reduced version of face-to-face interaction (Arminen et al., 2016). In a similar vein, persons with atypical bodily capabilities (ABCs) cannot use the same embodied resources as able-bodied normates (Garland-Thomson, 1997). For instance, when people with speech or hearing impairments (aphasia, dysarthria, or partial or complete hearing loss) are forced to use speech, they often find themselves at a disadvantage. In his study of aphasia, Barnes (2014) notes that repair often does not occur after a stretch of unintelligible talk. In other words, participants' agency in these two contexts is constrained, either by the technological infrastructure or by the common interactional infrastructure, such as turn-taking or repair.

This conceptualization of agency rests on the premise that agency is an inherently human attribute. In recent years, however, there have been various attempts to ascribe agency to elements of the material environment (Caronia & Mortari, 2015) and to 'distribute' the speaker, showing that agency is a joint, co-constructed, practical accomplishment shaped by a local ecology of action. In moving away from a 'deficiency perspective' (Arminen et al., 2016), the field of EMCA has thus taken steps to reconfigure the relationship between agent and environment (Mondada, 2013). Atypical interaction analysis (see Wilkinson et al., 2020) is at the forefront of these developments, since it underlines the broad range of at-hand resources that may be available to differently-abled participants and moves away from seeing 'disability' as an a priori upper limit on interactional participation. Rather, diverse resources can be deployed by diverse actors to accomplish diverse projects: an electronic speech aid can be employed as a device for collaborative storytelling (Auer & Hörmeyer, 2017), touch can be used to establish a haptic participation framework (Cekaite & Mondada, 2021; M. H. Goodwin, 2017; M. H. Goodwin & Cekaite, 2019; Raudaskoski, 2020), and even a limited vocabulary can be used to co-operatively accomplish complex actions (C. Goodwin, 1995).

In this paper, we draw on Goodwin's concept of 'public substrates' to conceptualize agency in social interaction. A public substrate consists of the products of prior action, which build up a surrounding environment that is available for subsequent action (C. Goodwin, 2018). When we reuse the words of our interlocutors, we draw on resources that were made publicly available by others (in Goodwin's words, we indexically incorporate them into our subsequent action). This idea helps us see agency as a distributed achievement of many actors in local environments that furnish the actors' capabilities. Thus, we can account for both technologically mediated and atypical interaction without an inapposite deficiency connotation.

2. Methodology

The following case study is based on videographic material collected in the context of a project researching the peculiarities of multimodal interaction in virtual reality (VR), where at least some of the participants used VR headsets and body sensors to transmit their embodied action into a telecopresent (Zhao, 2005) environment, with a humanoid avatar acting as a conduit for embodied action.

The research is placed within the tradition of multimodal conversation analysis (CA), which "uses video recordings as a way of documenting situated activities and social interactions as they happen within their ordinary settings, in the least intrusive way possible and without orchestrating the activities of the participants" (Merlino et al., 2022, p. 4). Although videography has primarily been employed to study 'real' interaction, recent scholarship suggests that it can also be applied to interaction analysis in VR (Auer & Stukenbrock, 2022; see also Brüning et al., 2012; Haddington & Oittinen, 2022; Klowait, 2023). Moreover, the ethnomethodological foundation of multimodal CA places the analytic focus on the methods deployed by participants for practical purposes, without an explicit commitment to a particular physical reality or set of bodily characteristics.

3. The Data

Over the course of three months, our team used PC-based recording equipment to collect video-ethnographic data in VRChat, a major cross-platform social VR application in which people from all over the world can interact multimodally through avatars and social environments that are often community-made. We chose VRChat because it gives participants broad powers to furnish the environment to their needs.

Our case study highlights a specific community, Helping Hands, "a community to encourage anyone who wants to learn sign to be able to interact with various Deaf communities and cultures inside VRChat, while also gaining the skills applicable to the real world" (Helping Hands Mission Statement, n.d.).

This case is particularly interesting for several reasons. When encountering VR, ABCs face a set of unique challenges and opportunities. On the one hand, most mainstream VR systems presuppose a normate user, with a headset that tracks unencumbered head motion and transmits audio-visual input, and two controllers to be wielded by normate hands. As such, a normate-centric design language and interaction model can be at odds with the interactional capabilities of ABCs: for example, VR experiences rarely provide legible subtitles, which makes it nigh on impossible for a Deaf person to comfortably experience a narrated story within VR. Furthermore, some ABCs might struggle with the granular hand motion inscribed in the control paradigm of certain experiences, while the headsets themselves may be difficult to wear for people with neck problems.

On the other hand, the malleability of VR spaces also makes it possible to find novel means of adapting the environment to one's needs. For instance, it is possible to add live subtitles to people's talk in VR, thereby making new forms of communication with variously-abled persons possible. Since the VR market is still relatively competitive (beyond Facebook's/Meta's Oculus, there is a range of competing VR systems, each with system-specific input paradigms), larger social VR spaces tend to be open to different input systems. This creates, at least theoretically, an 'in' for adaptive input paradigms and for the adaptation of interactional conventions to them (for an overview, see Klowait, 2023).

Our case highlights one environmental adaptation to the needs of Deaf persons: virtual pens.

4. Writing in the Air

VRChat's spaces do not have to follow the constraints of the physical world. A prominent example of such a constraint relaxation is the ability of participants to use special pens that can write in the air: 'airpens'. Certain rooms, originally those dedicated to lectures or pitch meetings, have airpens available that can be used by all participants. When a participant holding an airpen presses a button, a line appears wherever the airpen is located in the room. Moving the airpen through space, in any direction, leaves a colorful trace that is visible to the other participants in the room. Unless a global 'reset' button is pressed, or specific traces are wiped away with an eraser, the streaks persist over time and can be viewed from multiple angles by all sighted participants.

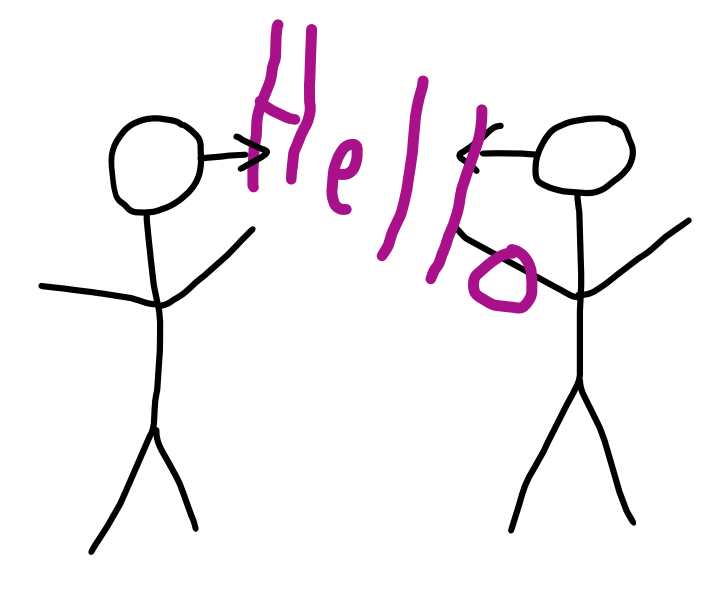

When used as a communicative device, the environmentally-coupled nature of the airpen interfaces with embodied formations between participants: if two participants are facing each other head-on, and the airpen is used to write something in the air between the participants, then the text being written will appear mirrored to one of the participants.

Figure 1. Asymmetry of ecological text

This circumstance has notable consequences for the facing formations (Kendon, 2009) commonly deployed when communicating with airpens. For instance, participants may choose to write in mirrored script from the outset; alternatively, they may allow for a repositioning of the interlocutor after writing a lengthy message; finally, the message may be written either at a 90-degree angle, allowing for a half-facing formation (Erofeeva & Klowait, 2021), or may outright be written with both participants facing the same direction.

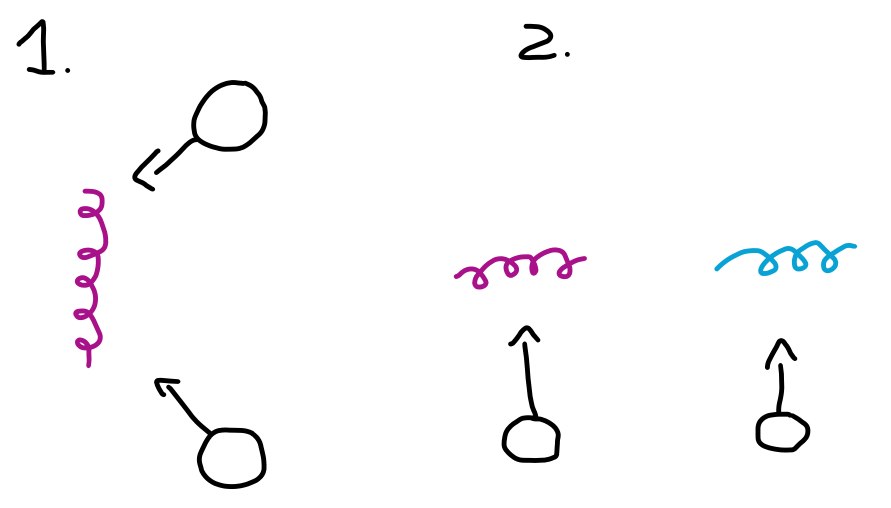

Figure 2. Typical facing formations of airpen use

Another interactionally relevant aspect of airpens is their role as a mutually accountable locus of gaze. Since avatars in VRChat are not necessarily of the same height, and do not necessarily have visible indicators of gaze direction, the position of the airpen as it draws a new line in the air may be the only reliable resource for establishing a shared focus of gaze.

While airpens may be mere curiosities for normates, they are explicitly highlighted as enabling the participation of Deaf persons within an otherwise normate-centric communicative infrastructure. This section introduces the role of the airpen within Helping Hands and demonstrates how it is used as a resource for scaffolding interactions that include Deaf persons.

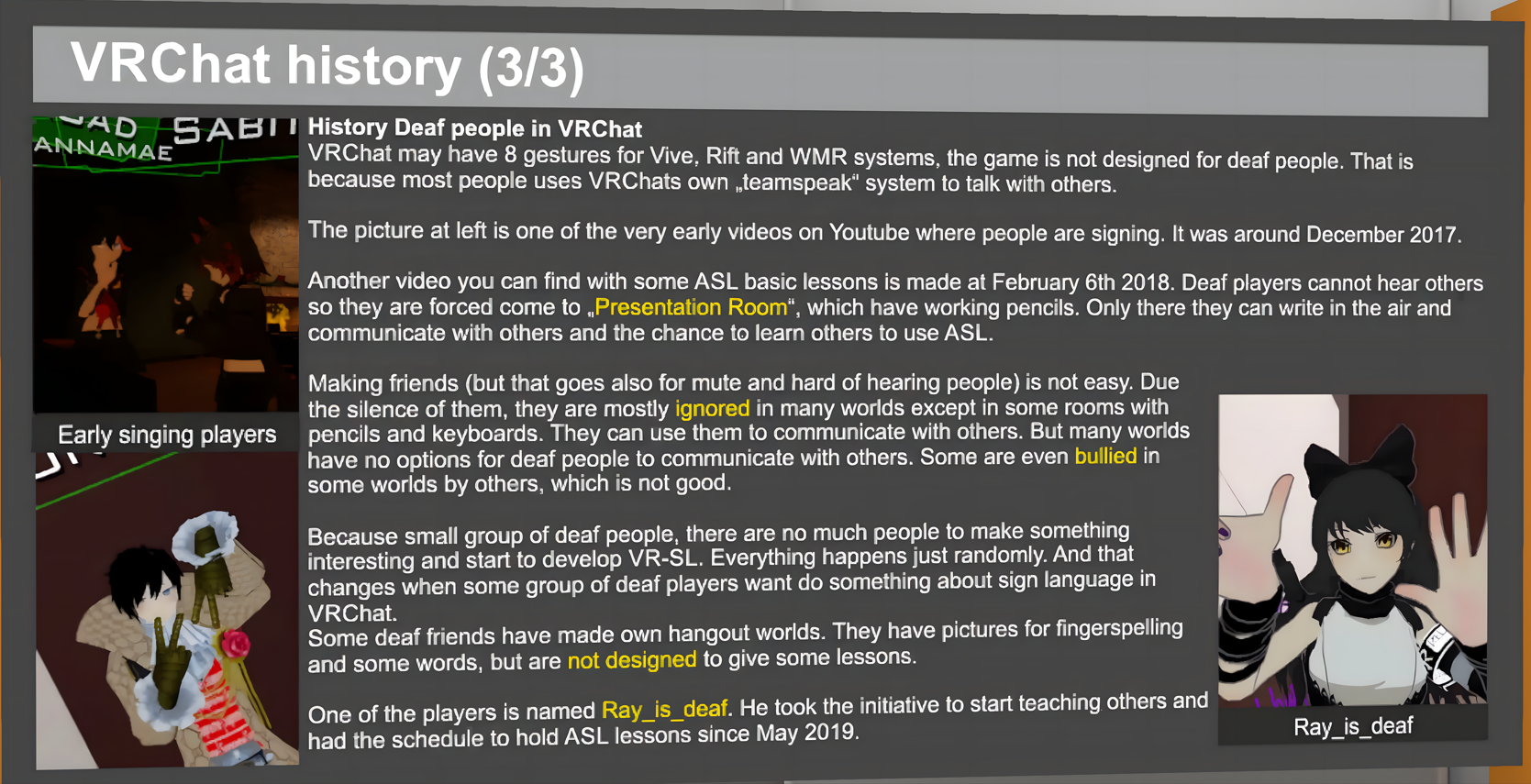

In a virtual room specifically dedicated to the history of VRChat, in relation to Helping Hands, the following is stated about airpens:

Figure 3. Sign Language Museum in MrDummy_NL's Sign&Fun world

In other words, the participants themselves describe airpens not only as a means of communicating with both Deaf persons and normates but also as enablers of sign-based languages specific to Deaf persons.

5. Building Action with Airpens

Communication by participants with different abilities becomes possible if they can attend to at least some common media. Goodwin has persuasively demonstrated how a participant who cannot speak can be an active speaker by reusing the rich language structures of others (C. Goodwin, 1995).

In our study, the use of a shared spoken or signed language is almost impossible, since one of the participants cannot hear and the other does not understand sign language. Airpens come to the rescue, as they substitute for the ability to talk. The properties of the resulting inscriptions are nevertheless drastically different from those of speech: they are produced much more slowly, but at the same time they are durable, as the written text stays floating in the air. We will now examine how differently-abled participants attend to this medium to build an understanding in interaction.

We join the sequence when Bill (the Deaf person) is giving Ann (a person who is not proficient in sign language) a tour of a virtual museum of the history of the Helping Hands community. Bill is an experienced user of VRChat and also operates a full-body tracking avatar, with all his physical movements being transmitted into virtual reality. Ann accesses VRChat from a desktop application, which means that she can move, look, and write in the air with only limited means: a mouse and a keyboard. She has not used airpens before; airpens are considerably harder to use with a mouse than with a virtual hand, since a mouse only affords three degrees of freedom (rotation), whereas a VR controller affords six (rotation and translation).

The sequence starts when Bill asks Ann 'did you come here before' by writing this question in the air. It takes more than 17 seconds to complete the first pair part. Due to the spatial characteristics of inscriptions, participants are encouraged to position their bodies so that the inscribed words are mutually available to them, as a necessary precondition for their interaction (Haddington & Oittinen, 2022). To achieve this, they adopt a side-by-side F-formation (Kendon, 2009). However, accomplishing this formation is nontrivial. Since the words take up space and are partially hidden behind the writer's back, Ann needs to reposition herself twice to be able to read what is being written in the air (lines 1, 4).

As soon as the question becomes projectable (when 'bef(ore)' appears), Ann turns away, walks to the other side of the room where the virtual pens are placed, equips herself with one of the available pens (line 12), and returns to the initial writing location (line 13). Airpens afford writing anywhere in the room, yet Ann walks back to the same spot where the first pair part is floating in the air to produce the second pair part next to it. This trajectory is not the only available option, since Bill can be seen to have followed her (line 13) and was therefore in a formation to perceive her answer earlier, or 'locationally sooner'. The choice to return to the initial drawing location indicates that Ann treats the inscription in the air as a material sequential infrastructure, a kind of writing board, where it is relevant to place an answer to a question. The conditional relevance of the position of the second pair part here is not only temporal but also spatial: the answer co-occupies a material-interactional ecology with the participants. Although the words in the air are not material in a traditional sense, they are treated as such.

Transcript 1. Co-operative airpen 'no' I 3

After Ann returns, she struggles to produce an answer: the position of the pen in her hand is awkward, and she cannot easily see what she is writing. This has to do with the way the pen was initially grasped: like a broom handle rather than a writing implement. Ann manages to draw two symbols (lines 14, 19), constantly checking on the result of her actions (lines 16–17, 20). From their shape, it is possible to infer that the answer is 'no', since the first pair part was a polar question. Nonetheless, Bill does not treat this answer as sufficient: he does not acknowledge it and instead initiates an instructional sequence concerning the use of airpens.

Bill starts rotating towards the letters written by Ann (Fig. 1), whereupon she orients to him, projects that the activity he is initiating concerns writing in the air, and shifts her gaze to the 'writing space' even before he stops moving. Bill moves towards the pen Ann is holding in her hand, and she releases it, leaving it floating in the air. She then looks at Bill, presumably to understand what he wants her to do, and adjusts her field of view by stepping back to see both Bill and the pen. As Ann disattends the 'writing space' and starts moving, Bill suspends his current project of action: readjusting the pen to help Ann write her answer properly. They reach an F-formation (Fig. 2) about halfway through, but no other action is initiated before Ann's trajectory is completed. When she stops, both participants carry on with their respective projects of action: Ann makes a sharp back-and-forth movement of the head, similar to a head shake, while Bill reaches towards the pen. The movement of Ann's head may be simple motor clumsiness, such as a misclick of the mouse, but there are grounds for believing that she is attempting to perform a 'no' answer with this head movement: she may still be uncertain about the type of activity they are engaged in, while understanding that her previous 'answer' was not treated as sufficient. Bill does not react to this head shake, as he is performing a 'grab' action. Ann attends to his movements and looks at the pen while Bill is repositioning it (Fig. 3). Since Ann does not proceed to take the pen, Bill shifts his gaze to her and points to the pen with his palm (Fig. 4), inviting her to take it. At this point, Ann seems to understand what is going on and takes the pen.

Transcript 2. Co-operative airpen 'no' II 4

After she takes the pen, Ann starts writing 'no' in the air, which takes her approximately 8 seconds (not shown in the transcript). She then turns to Bill (Fig. 6) to check whether he accepts her answer this time and shakes her head once again, with a level trajectory that is consistent with communicative intent. Thus, she repeats her written answer with a head movement, possibly resorting to a more familiar modality. Interestingly, as she uses a desktop application with the mouse as a controller, she cannot perform these actions simultaneously; she therefore writes first and then shakes her head. This movement seems redundant, as the answer is now written clearly, but, as Goodwin (2018) has shown, semiotic modalities do not simply mirror each other; rather, they help participants build action in a particular embodied configuration. Bill is looking at the 'writing board' while Ann is writing, and by looking at him and shaking her head, Ann changes the participation framework and secures Bill's attention (Fig. 7). Although the answer has been given, the sequence cannot be treated as recognizably complete without some kind of sequence-closing third, as Bill did not acknowledge Ann's two previous attempts to answer. After 1.8 seconds without uptake from Bill, Ann releases the pen (Fig. 8) and glances briefly towards the writing space and back to Bill, as if seeking acknowledgment of her answer. She does not appear to receive a direct acknowledgment; however, after her movement, Bill visibly initiates a new sequence by performing an 'inviting' gesture (Fig. 9) and moving away. By moving on to a next sequence, Bill thus displays that the previous sequence is closed.

Transcript 3. Co-operative airpen 'no' III

As can be seen from this example, participants treat inscriptions created with airpens as 'material', whose spatial properties structure how the sequence is organized. In Mondada's words, the spatiality of participation is both "action-shaping and action-shaped" (2013, p. 250). Our second observation is that Ann, who has no disabilities in an ordinary sense, turns out to be the more challenged participant when it comes to producing a simple answer to a question once she is placed in a virtual body with only one controller for all her 'modalities' and lacks experience in this space. This points to the situated nature of agency, which is rooted in the local ecology of a virtual space and its specific resources for action. What is more, Ann would not have been able to answer had Bill not helped her. The participants constructed the answer jointly by using airpens, as well as their bodies and gaze, to coordinate action. Thus, the agency we observe in this case is not only situated but also distributed across participants.

6. Conclusion

As our case study shows, airpens are used to substitute for a spoken modality; however, due to the properties of the inscriptions, the newly created flow of 'speech' becomes much more durable. Unlike "the fleeting, evanescent decay of speech, which disappears as material substance as soon as it is spoken" (C. Goodwin, 2018, p. 171), the written 'speech' stays in the air and creates a focal point for the participants' attention, with broadened possibilities for the indexical incorporation of what was previously written. Due to their durability, these inscriptions become public substrates for subsequent action. Thus, although written words are virtual and can be walked through by participants, the participants attend to them as material in a very real sense: they create an imaginary writing board that gathers communicators around it. Yet this newly created 'object' is only relevant if it is reused in subsequent courses of action.

This ecological approach helps to account for non-human agency without treating objects either as mere facilitators or as adversaries. As our analysis has demonstrated, for the experienced Deaf participant, airpens scaffold the ability to communicate, but they are recalcitrant to 'normates',5 who encounter this new infrastructure for agency as newcomers. Thus, airpens are neither resources nor constraints when seen in isolation from their context of use. Rather, they comprise certain possibilities for action which, when interlaced with another set of action possibilities provided by an avatar, create an ability to act. Agency is thus not only situated but also distributed among different elements of the surrounding environment, including participants' bodies.

In sum, this case shows how members build action by combining unlike materials with complementary properties such as written inscriptions, movement through space, and gesture. The role of the material environment lies within the possibilities it furnishes for action.

Acknowledgments

Research for this article was funded by the collaborative research center "Constructing Explainability" (DFG TRR 318/1 2021 – 438445824) at Paderborn University and Bielefeld University.

References

Arminen, I., Licoppe, C., & Spagnolli, A. (2016). Respecifying Mediated Interaction. Research on Language and Social Interaction, 49(4), 290–309. https://doi.org/10.1080/08351813.2016.1234614

Auer, P., & Hörmeyer, I. (2017). Achieving Intersubjectivity in Augmentative and Alternative Communication (AAC): Intercorporeal, Embodied, and Disembodied Practices. In C. Meyer, J. Streeck, & J. S. Jordan (Eds.), Foundations of human interaction. Intercorporeality: Emerging socialities in interaction (pp. 323–360). Oxford University Press.

Auer, P., & Stukenbrock, A. (2022). Deictic reference in space. In A. H. Jucker & H. Hausendorf (Eds.), Pragmatics of Space (pp. 23–62). De Gruyter. https://doi.org/10.1515/9783110693713-002

Barnes, S. (2014). Managing Intersubjectivity in Aphasia. Research on Language and Social Interaction, 47(2), 130–150. https://doi.org/10.1080/08351813.2014.900216

Brassac, C., Fixmer, P., Mondada, L., & Vinck, D. (2008). Interweaving Objects, Gestures, and Talk in Context. Mind, Culture, and Activity, 15(3), 208–233. https://doi.org/10.1080/10749030802186686

Brüning, B., Schnier, C., Pitsch, K., & Wachsmuth, S. (2012). Integrating PAMOCAT in the research cycle. In L.-P. Morency, D. Bohus, H. Aghajan, J. Cassell, A. Nijholt, & J. Epps (Eds.), Proceedings of the 14th ACM international conference on Multimodal interaction (pp. 201–208). ACM. https://doi.org/10.1145/2388676.2388716

Caronia, L., & Mortari, L. (2015). The agency of things: how spaces and artefacts organize the moral order of an intensive care unit. Social Semiotics, 25(4), 401–422. https://doi.org/10.1080/10350330.2015.1059576

Cekaite, A., & Mondada, L. (Eds.). (2021). Touch in social interaction: Touch, Language, and Body. Routledge.

Day, D., & Wagner, J. (2014). Objects as tools for talk. In M. Nevile, P. Haddington, T. Heinemann, & M. Rauniomaa (Eds.), Interacting with objects: Language, materiality, and social activity (pp. 101–124). John Benjamins Publishing Company.

Erofeeva, M. (2019). On multiple agencies: when do things matter? Information, Communication & Society, 22(5), 590–604. https://doi.org/10.1080/1369118X.2019.1566486

Erofeeva, M., & Klowait, N. O. (2021). The Impact of Virtual Reality, Augmented Reality, and Interactive Whiteboards on the Attention Management in Secondary School STEM Teaching. In 2021 7th International Conference of the Immersive Learning Research Network (iLRN).

Fox, B. A., & Heinemann, T. (2015). The Alignment of Manual and Verbal Displays in Requests for the Repair of an Object. Research on Language and Social Interaction, 48(3), 342–362. https://doi.org/10.1080/08351813.2015.1058608

Garland-Thomson, R. (1997). Exceptional bodies: Figuring physical disability in American literature and culture. Columbia University Press.

Goffman, E. (1981). Forms of talk. University of Pennsylvania Press.

Goodwin, C. (1981). Conversational organization: Interaction between speakers and hearers. Academic Press.

Goodwin, C. (1995). Co-Constructing Meaning in Conversations With an Aphasic Man. Research on Language and Social Interaction, 28(3), 233–260. https://doi.org/10.1207/s15327973rlsi2803_4

Goodwin, C. (2000). Action and embodiment within situated human interaction. Journal of Pragmatics, 32(10), 1489–1522. https://doi.org/10.1016/S0378-2166(99)00096-X

Goodwin, C. (2018). Co-operative action. Learning in doing. Cambridge University Press.

Goodwin, M. H. (2017). Haptic Sociality: The Embodied Interactive Constitution of Intimacy through Touch. In C. Meyer, J. Streeck, & J. S. Jordan (Eds.), Intercorporeality: Emerging socialities in interaction (pp. 73–102). Oxford University Press.

Goodwin, M. H., & Cekaite, A. (2019). Embodied family choreography: Practices of control, care, and mundane creativity. Routledge.

Haddington, P., & Oittinen, T. (2022). Interactional spaces in stationary, mobile, video-mediated and virtual encounters. In A. H. Jucker & H. Hausendorf (Eds.), Pragmatics of Space (pp. 317–362). De Gruyter. https://doi.org/10.1515/9783110693713-011

Helping Hands mission statement. (n.d.). https://docs.google.com/document/d/1KFTiPRlER_Lf0aIbqfHDOWGqsl9aoyY2DGzeoqz5zD0/edit

Kendon, A. (2009). Conducting interaction: Patterns of behavior in focused encounters. Cambridge University Press.

Klowait, N. O. (2023). On the Multimodal Resolution of a Search Sequence in Virtual Reality. Human Behavior and Emerging Technologies, Article ID 8417012. https://doi.org/10.1155/2023/8417012

Latour, B. (2005). Reassembling the social: An introduction to actor-network-theory. Clarendon lectures in management studies. Oxford University Press.

Licoppe, C., Luff, P. K., Heath, C., Kuzuoka, H., Yamashita, N., & Tuncer, S. (2017). Showing Objects. In G. Mark, S. Fussell, C. Lampe, m. schraefel, J. P. Hourcade, C. Appert, & D. Wigdor (Eds.), Proceedings of the 2017 CHI Conference on Human Factors in Computing Systems (pp. 5295–5306). ACM. https://doi.org/10.1145/3025453.3025848

Lindström, J. K., Norrby, C., Wide, C., & Nilsson, J. (2017). Intersubjectivity at the counter: Artefacts and multimodal interaction in theatre box office encounters. Journal of Pragmatics, 108, 81–97. https://doi.org/10.1016/j.pragma.2016.11.009

Luff, P., Heath, C., Kuzuoka, H., Hindmarsh, J., Yamazaki, K., & Oyama, S. (2003). Fractured Ecologies: Creating Environments for Collaboration. Human–Computer Interaction, 18(1-2), 51–84. https://doi.org/10.1207/S15327051HCI1812_3

Merlino, S., Mondada, L., & Söderström, O. (2022). Walking through the city soundscape: an audio-visual analysis of sensory experience for people with psychosis. Visual Communication, 22(1), 71–95. https://doi.org/10.1177/14703572211052638

Mondada, L. (2001). Conventions for multimodal transcription. https://www.lorenzamondada.net/multimodal-transcription

Mondada, L. (2013). Interactional space and the study of embodied talk-in-interaction. In P. Auer, M. Hilpert, A. Stukenbrock, & B. Szmrecsanyi (Eds.), Space in Language and Linguistics (pp. 247–275). De Gruyter. https://doi.org/10.1515/9783110312027.247

Mondada, L. (2016). Challenges of multimodality: Language and the body in social interaction. Journal of Sociolinguistics, 20(3), 336–366. https://doi.org/10.1111/josl.1_12177

Mondada, L. (2019). Contemporary issues in conversation analysis: Embodiment and materiality, multimodality and multisensoriality in social interaction. Journal of Pragmatics, 145, 47–62. https://doi.org/10.1016/j.pragma.2019.01.016

Nevile, M. (2015). The Embodied Turn in Research on Language and Social Interaction. Research on Language and Social Interaction, 48(2), 121–151. https://doi.org/10.1080/08351813.2015.1025499

Nevile, M., Haddington, P., Heinemann, T., & Rauniomaa, M. (Eds.). (2014). Interacting with objects: Language, materiality, and social activity. John Benjamins Publishing Company.

Raudaskoski, P. (2020). Participant status through touch-in-interaction in a residential home for people with acquired brain injury. Social Interaction. Video-Based Studies of Human Sociality, 3(1). https://doi.org/10.7146/si.v3i1.120269

Seuren, L. M., Wherton, J., Greenhalgh, T., & Shaw, S. E. (2021). Whose turn is it anyway? Latency and the organization of turn-taking in video-mediated interaction. Journal of Pragmatics, 172, 63–78. https://doi.org/10.1016/j.pragma.2020.11.005

Wilkinson, R., Rae, J. P., & Rasmussen, G. (2020). Atypical Interaction. Springer International Publishing. https://doi.org/10.1007/978-3-030-28799-3

Zhao, S. (2005). The Digital Self: Through the Looking Glass of Telecopresent Others. Symbolic Interaction, 28(3), 387–405. https://doi.org/10.1525/si.2005.28.3.387

1 A Russian translation of an earlier version of the paper was published in: Erofeeva M. A., Klowait N., Zababurin D. R. (2022) How to Speak Silently–Rethinking Materiality, Agency, and Communicative Competence in Virtual Reality. Sociology of Power, 34(3-4): 156-181. DOI: https://doi.org/10.22394/2074-0492-2022-4-156-181

2 One of the prominent representatives of the material turn, Bruno Latour, who made a significant contribution to the conceptualization of the agency of things (Erofeeva, 2019), points out that the model of agency in which the greater the influence of the context, the less people act is untenable: it does not allow one to see the joint action of people and things, since the latter are always considered to be adversaries (Latour, 2005).

3 Transcript 1 uses a simplified version of Mondada's (2001) conventions. Since the analyzed actions have a pronounced sequential, rather than simultaneous, quality, timelines are referenced whenever they have analytic bearing on the interaction (lines 1, 4, 13). In all other cases, the timing of the airpen drawing is provided in brackets. The in-text drawings are positioned at the point of their completion.

4 In transcripts 2 and 3, we employ a system developed by Goodwin (1981) for tracking gaze, indicated on the lines below the embodied conduct. A series of dots denotes that one party is turning towards another, and a continuous line indicates that the participant is looking in the direction of the co-participant. In this case, a dashed line indicates that the participant is gazing at the writing space. One of the features of virtual reality is that gaze can only be approximated by monitoring the movement of the head, not the eyes, because eye movements are not tracked by the system. This peculiarity also makes it more difficult to track the exact gaze direction, which is therefore an approximation, both for the analysts and for the participants.

5 As a reviewer of an earlier version of this paper pointed out, it is an open question whether a normate in VR is meaningfully equivalent to a normate in the physical world. It can be argued that VR is a polynormate space in which communities are able to furnish their world as an interactional ecology, to the point where traditionally disadvantaged groups gain the status of 'localized normates'.