Social Interaction.

Video-Based Studies of Human Sociality.

Social Interaction.

Video-Based Studies of Human Sociality.

2019 Vol. 2, Issue 1

ISBN: 2446-3620

DOI: 10.7146/si.v2i1.110409

Social Interaction

Video-Based Studies of Human Sociality

Inhabiting spatial video and audio data: Towards a scenographic turn in the analysis of social interaction

Paul McIlvenny, Aalborg University[i]

Consumer versions of the passive 360° and stereoscopic omni-directional camera have recently come to market, generating new possibilities for qualitative video data collection. This paper discusses some of the methodological issues raised by collecting, manipulating and analysing complex video data recorded with 360° cameras and ambisonic microphones. It also reports on the development of a simple, yet powerful prototype to support focused engagement with such 360° recordings of a scene. The paper proposes that we ‘inhabit’ video through a tangible interface in virtual reality (VR) in order to explore complex spatial video and audio recordings of a single scene in which social interaction took place. The prototype is a software package called AVA360VR (‘Annotate, Visualise, Analyse 360° video in VR’). The paper is illustrated through a number of video clips, including a composite video of raw and semi-processed multi-cam recordings, a 360° video with spatial audio, a video comprising a sequence of static 360° screenshots of the AVA360VR interface, and a video comprising several screen capture clips of actual use of the tool. The paper discusses the prototype’s development and its analytical possibilities when inhabiting spatial video and audio footage as a complementary mode of re-presenting, engaging with, sharing and collaborating on interactional video data.

Keywords: 360° camera, Spatial audio, Evidential adequacy, Visualisation, Inhabited video, Virtual reality, Social interaction, Conversation analysis

1. Introduction

As analysts who collect and analyse qualitative video data, we are often stuck in a 2D planar representation of the social and physical world, framed by the consumer video camera and its in-built stereo microphone. Recently, consumer versions of the passive 360° and stereoscopic omni-directional camera have come to market, with spatial audio as a tricky supplement, which may facilitate the feeling of immersion when viewing a recorded scene. The present paper discusses some methodological issues raised by collecting, manipulating and analysing complex video data recorded with 360° cameras and ambisonic microphones from a conversation analytical perspective. It is becoming clear that we need a turn towards a more scenographic imagination – for instance, drawing from immersive theatre and gaming – to discover and multiply an innovative apparatus of observation with fresh ways to ‘sense-with-a-camera’.

Drawing upon a ‘scenographic turn’, this paper also reports on the development of a simple, yet powerful prototype to support practical, tangible and immersive engagement with 360° recordings of a scene in which social interaction took place. In terms of workflow, the prototype was iteratively developed locally on the basis of our extensive experience of working with qualitative video data, from data collection to publication. The prototype is designed to support the early stages of analysis when working closely with complex video and audio recordings that include 360° footage and spatial audio. It is not aimed at transcription per se; instead, it supports the analyst’s preliminary forays into the raw video footage, both before and after a transcript has been prepared. The paper seeks to demonstrate that virtual reality (VR) technology can be used to sustain a credible sense of immersion in the recorded scene and a tangible interaction with 360° and 2D video, and that these two features enhance the early stages of qualitative analysis. The prototype that has been developed is a software package provisionally called AVA360VR, which is an acronym for ‘Annotate, Visualise, Analyse 360° video in Virtual Reality’.

The paper is illustrated with a number of video clips in different formats. First, there is an embedded composite video of raw and semi-processed multi-cam recordings made during a naturally occurring camerawork training session for a team preparing to record naturalistic video data in the wild. Second, readers can view a prepared 360° video clip with spatial audio from a particular point-of-view in the scene. Third, there is an embedded video comprising a sequence of static 360° screenshots of AVA360VR’s basic functions. Fourth, there is a video recording of an early live demonstration of a ‘proof of concept’ prototype. The demonstration shows two analysts working on the camerawork training session data, simulating collaboration at a distance. Fifth, there is an embedded video comprising several screen capture clips of actual use of the tool, demonstrating some of its functionality (alpha version in January 2018). Furthermore, two cases are discussed in which the prototype was used to efficiently construct a preliminary visual argument for the interactional accomplishment of the phenomena in question. All the videos except the first clip use footage or screenshots captured from live sessions in the head-mounted display (HMD), in which an analyst using AVA360VR ‘inhabits’ the same video data as shown in the first clip.

2. Collecting video data with new camera technologies

If we pass over the history of scientific cinema from the late-nineteenth century onwards and skip forwards to the invention of video recording technology, screen-based display devices and the consumer video camera, we find that researchers have developed particular ways of working with video. I wish to address a few common assumptions that have been made regarding video data collection. These assumptions are challenged by new technologies, such as stereoscopic 360° cameras and ambisonic microphones as well as by novel data collection methods. Note that the assumptions listed here are not usually stated explicitly.

First, it is often assumed when recording that one is closer to the action if one uses a ‘natural’ camera viewpoint, namely a single 2D camera recording from a neutral position in the scene. This is indicative of a naively realist stance that relies on the cinematographic genres of truth and subjectivity that have become naturalised in the past 130 years of moving images. An uneasy equivalence is made between technological mediation and perception, which belies the constitutive relationships between the apparatus of observation and the object of attention (Barad, 2001). For example, modern recording gadgets often render opaque the design decisions that are made and default settings that are used in the recording of generic scenes. Although there is a general trend for researchers to use more cameras and microphones when circumstances dictate (Heath, Hindmarsh, & Luff, 2010), this is not yet common practice. And even so, it is often assumed in practice that the ‘natural’ field of view of any camera (and microphone) is that of a neutral subject in the scene, which means that the camera is positioned at head-height at a non-intrusive distance from the interactants. But cameras and microphones are neither eyes nor ears. Camera footage is not at all what people normally see and hear at the location of the device. Thus, why not move the camera(s) into the (inter)action or raise it/them above or below eye level (as we shall see in the embedded video clips later in the paper)?

Second, even if we assume for the moment that a single camera faithfully records the scene, one cannot claim that the analyst who replays and closely examines the recordings of one camera and one microphone is doing so from a participants’ perspective. I will steer clear of the etic/emic debate that tends to dichotomise and reify our epistemological access to the social and the cultural (Headland, Pike, & Harris, 1990; Jardine, 2004; Markee, 2013; Pike, 1967), but as Hirschauer (2006) has profoundly questioned, analysis is not a members’ practice in the scene, and although recordings are powerful analytical resources, they tend to be used to manufacture an ‘original’ self-identical conversation for analytical purposes. The lived work of making sense is not capturable in this way, frozen on the video record, from which the analyst simply extracts it in vivo. It is a pervasive tension in all approaches to registering or fixing the phenomenal trace of conduct, including with 360° cameras.

Third, it may be argued that increasing the number of recording devices is not a participants’ perspective, since participants do not routinely have access to multiple points-of-view. This assumes that participants have only one point-of-view in the course of social interaction and that this is knowable, when in fact, perceptually, members continually adjust perspective and point-of-view. One could not see, hear and navigate the three-dimensional world if one’s head and body were locked in a rigid position for the duration of an encounter. Tiny micro-movements of the head and body are part and parcel of our normal perceptual acuity. Therefore, why should analysts restrict themselves to just one camera or microphone position? It may, in fact, be the case that proliferating the recording devices leads not to an idealised panoptic or oligoptic overview of the scene, divorced from the ordinary practices that constitute the order of the scene – though that danger does, of course, exist, as Livingstone (1987) demonstrates – but leads instead to a position from which the analyst has the means to challenge the veracity of the privileged perspective promoted by the ‘one neutral camera is enough in practice’ stance. With one camera, one cannot see otherwise, and with one microphone, one cannot hear otherwise (see Modaff & Modaff, 2000 for an example of issues that arise if two microphones are used). My experience has been that recording video and audio data with many devices requires us to be more circumspect about this data and its evidential adequacy, prompting us to cast a sceptical eye and ear over our legacy data as well. I contend that a notional point-of-view anchored in a single apparatus becomes fragmented when the apparatus is proliferated.

Things get complicated when we consider new camera technologies such as stereoscopic 360°. If we look ahead for a moment and take a cursory viewing of the composite footage in Clip 1 in Section 5, we see that the 360° cameras used to collect the data offer us many views through different lenses at the same point of recording. Which lens provides a more ‘natural’ viewpoint? Is it a problem that the analyst has potentially more (ethnographic) resources than any one participant to determine relevant action or peripheral actors in the scene, simply because an analyst can look in all directions after the event, though only from the point-of-view of the 360° camera rig? Moreover, the stitching of the footage from each lens into the one equirectangular 360° video shown in the clip is not easy to decipher on the 2D screen, given that this format distorts the image. How should analysts view this new format in ways that enhance the possibilities for analysis? The clip also shows that many video and audio recordings were taken at different spatial locations. These enable the analyst to review social action and embodiment from different perspectives, but how should these be assembled in a coherent manner for the analyst? These are some of the issues that the AVA360VR prototype is designed to address.

3. Manipulating and annotating video data

With the digital age, video has moved onto the computer, drawing upon the virtual metaphor of the desktop non-linear editor based on a timeline and fragments of digital footage. Video footage can be played back at different speeds, rewound, played again, zoomed and frozen as part and parcel of editing practices, as Laurier & Brown (2011, 2014) and Laurier, Strebel, & Brown (2008) have shown in their study of professional video editors. Ultimately, we have not progressed much from these long-standing tools of manipulation. We do have better resolution, faster frame rates, a more stable and colour-calibrated image, and higher-resolution stereo audio channels. Nevertheless, the video is viewed repeatedly on a 2D screen within the field of view, depth of field, focus, etc. that was registered in a rather technically complex manner on the sensor through the camera’s optical lens mediated by the camera’s viewfinder.

Once one has assembled a corpus of video recordings, annotations (or coding) of the video can be made in a text file (script-based or score-based) that is synced to the video, making playback of the relevant segment relatively intuitive. Crucially, this keeps the researcher tethered to the video in a reflexive practice of listening and hearing, seeing and viewing, that constitutes the work of transcription and analysis (Ashmore & Reed, 2000). Nevertheless, an underlying goal of popular qualitative software tools such as ANVIL, CLAN, ELAN, FOLKER and TRANSANA (Kipp, 2010; MacWhinney & Wagner, 2010; Schmidt, 2016; Woods & Dempster, 2011) is to quickly abstract from the video and treat the textual annotations as ciphers for further computational processing. This is a powerful technique that facilitates additional quantitative analysis and qualitative data management typically found in CAQDAS systems. However, this has its disadvantages as well. For example, the video becomes both a distant static object and just a proxy for the event in order to resolve ambiguities. Working with the video is like watching TV with a VCR; not much has changed. Other tools have better visualisations of multi-stream data sets, but they remain within the monoscopic 2D screen of the desktop computer (Crabtree, Tennent, Brundell, & Knight, 2015; Fouse, Weibel, Hutchins, & Hollan, 2011).

Are we missing something? How can we cope with new technologies of capture such as 360° cameras and spatial audio? And can we develop better tools that enhance and even transcend traditional analytical practices? Augmented telestrators are popular in television production, for example, for drawing annotations on the screen in live-action broadcasts such as sports commentary, but they have not been widely used in qualitative research. There has been some exploration of alternative annotation interfaces for distributed data sessions (Fraser et al., 2006) and studies of unusual configurations of shared screens used by other disciplines for analysis (Luff et al., 2013). Others have tried to combine the collection of rich video data (with an early low frame-rate 360° camera) with automatic recognition of relevant features (Keskinarkaus et al., 2016). The present paper builds upon this innovative work by seeking to provide some new solutions that may have unexpected methodological benefits.

4. Big video and the scenographic turn

Over the past few years, a small group of colleagues has been experimenting with new camera and microphone technologies (Davidsen & McIlvenny, 2016; McIlvenny & Davidsen, 2017). Bearing in mind the complexity of the recording scenarios, and the increasing use of computational tools and resources, we position ourselves within what we call the Big Video paradigm. This term is promoted to counter the hype about quantitative big data analytics. The drive here is to instead develop an enhanced infrastructure for qualitative video analysis, with innovation in four key areas: 1) capture, storage, archiving and access of digital video; 2) visualisation, transformation and presentation; 3) collaboration and sharing; and 4) qualitative tools to support analysis. Big Video involves dealing with complex human data capture scenarios, large databases and distributed storage, computationally intensive virtualisation and the visualisation of concurrent data streams, and distributed collaboration. For more on the guiding principles of Big Video, read the Big Video Manifesto (McIlvenny & Davidsen, 2017).

Within Big Video, this paper contends that we are on the cusp of a transformation in the paradigm and accompanying metaphors upon which video-based research on social interaction draws. Up until recently, cinema and film have provided a dominant cinematographic imagination for how to ‘see-with-a-camera’. Traditional recording technology and the metaphor of the ‘camera’ and the ‘frame’ have played formative and constitutive roles in shaping our understanding of the world, including talk and social interaction. It is nevertheless becoming clear that there has been a turn towards a more scenographic imagination, often drawing from immersive theatre and gaming, to discover and multiply an innovative apparatus of observation with fresh ways to ‘sense-with-a-camera’. McKinney & Palmer (2017) locate the recent turn towards expanded scenography in performance studies in terms of a reconsideration of the performative, the material, the embodied and the spatial in sites outside traditional performance spaces like the theatre. Drawing upon a scenographic metaphor, I refer to inhabited video, which facilitates exploring complex spatial video and audio recordings of a single scene in which social interaction took place through a tangible interface in virtual reality.[1] Inhabited video is complementary to staging video, which is reconstructing the site and the scenes in which social interactions took place over time in an interactive and immersive 3D representation or model in virtual or augmented reality. In the analytical workflow, staging can be seen as antecedent to inhabiting video. This paper reports on the development of a prototype to support inhabiting video through tangible and immersive engagement with recordings of a scene in which social interaction took place.[2]

5. Data example

Before looking at the prototype itself, an introduction is necessary to the kind of video data for which the tool is designed. Examples are drawn from a small corpus of 360° video and audio recordings made in a training and instructional setting in which talk takes place. The footage was recorded during a real camerawork training session for a research team preparing to record naturalistic data in the wild. The praxeology of camerawork is worthy of study, as Mondada (2014) and (Mengis, Nicolini, & Gorli, 2018) have demonstrated for 2D video camera technology, but it is not the primary focus of this paper. In the scene that will be used in this paper, the team has been instructed to move together and to film the interaction between a nature guide and an imaginary nature tour group who are following the guide outside. The exercise seeks to assemble a mobile filming formation (McIlvenny, Broth, & Haddington, 2014) that best captures and registers the unfolding events for the purposes of analysis.

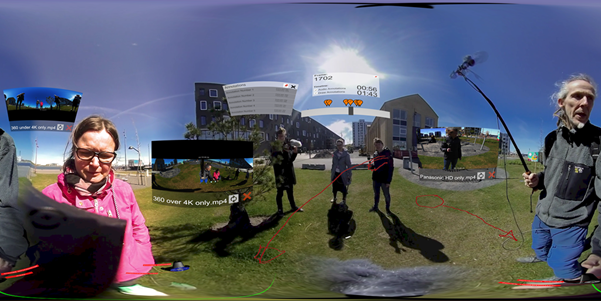

In the following embedded video clip, in traditional 2D format, a short excerpt (1:43 minutes) from the camerawork training session outside can be seen in which the raw and processed footage from the different cameras is composited in a single frame. The original composite file has 32 channels of audio so that one can switch between different microphones and combinations of microphones. This is indicated in the video clip but is not available on YouTube. All participants are playing particular roles in the training session, and each carries a camera. In preparation for the real data collection with a guide, Bethany is pretending to be a nature guide. She carries a GoPro camera on a gimbal. The recording team is comprised of Peter, Rachel, Christina and Timothy. Peter is the instructor, who is carrying a single lens 360° camera on a raised extension pole with a separate ambisonic microphone. Rachel and Timothy are filming with a prosumer camcorder and a single lens 360° camera on a lowered extension pole respectively. Christina is filming with a stereoscopic 360° camera and an independent ambisonic microphone on a monopod. In a nutshell, this is a typical team filming arrangement, in which the team needs to attentively yet silently coordinate their joint camerawork. Team members have used a similar arrangement since 2016.

In the scene, the participants approach a grassy area, and Bethany stops and informs the group about the flora present. Peter instructs Christina to move to a new position. After some further guiding by Bethany, Peter interrupts the role play to discuss the reasoning behind a particular configuration of cameras and microphones in this context in relation to wind noise, the virtual tour group and the relative positioning of each cameraperson. After some discussion, they move on to another location. Note that from the perspective of the participants, the guided tour is role play, but the camerawork exercise is a real training exercise for later data collection endeavours with these same team members. The video data was initially collected to facilitate recall and review of the footage.

For reference, and to provide a translation, a straightforward CA transcript is given in an appendix, using the standard Jeffersonian conventions. It focuses on talk (in Danish and English), social interaction and some of the gross actions in the scene. Some parts of this transcript will appear later in a screenshot and an embedded video clip. The innovative aspect of the AVA360VR prototype is that transcript segments can be inserted as a synchronised, interactive, virtual object in an immersive representation of the 360° video.

Besides the 2D composite video clip above, 360° video clips of the camerawork training session are also available online and are embedded in this paper, as are 2D clips captured from a live session in the head-mounted display in which the analyst ‘inhabits’ the video data in 360°. A variety of software to support stitching, synching and aligning stereoscopic and monoscopic passive 360° video with ambisonic sound recordings was used to prepare the video from the raw footage, sometimes in unconventional ways. You may notice some distortion along stitch lines in the image. Special software is usually required to view 360° videos, but online public video archives such as YouTube and Vimeo now provide the means to upload and embed such videos in a webpage if they are in the correct format. The 360° videos can be viewed in an appropriate browser on the desktop, e.g. Chrome or Firefox, but they also work well in a YouTube app on a smartphone while wearing headphones. If you have Google Cardboard or another head-mounted display in which you can place your smartphone, then you can use head-tracking to view the 360° videos and hear spatial audio on headphones. When viewing the videos embedded in this paper, it is best to select the highest resolution possible, e.g. 2160s (4K), from the settings option in the YouTube video window. The default resolution is much lower. Readers will be able to view the videos and use their mouse or finger to move their field-of-view in the scene and thus look where they wish. Moreover, if the reader wears headphones, then the sound in the 360° clips will be spatialised rather than head-locked, i.e. voices in the scene will seem to come from where they should, even if you turn around (this will not work with external speakers, only with stereo headphones). These properties of the video playback may offer more immersive engagement with the original video and audio recording or registration.

In the following embedded clip, the same camerawork training scene is illustrated by a 360° video, which is stitched together from the footage taken by a stereoscopic 360° camera with eight lenses.

The spatial audio is from an independent ambisonic microphone mounted below the camera on a monopod, which Christina is carrying. This footage is presented monoscopically, for the sake of ease of viewing on a 2D monitor.

As always, the issue with such complex video data in various formats, including 360°, is where to start and how to view it all so as to begin to explore the organisation of social interaction in an unmotivated fashion. The AVA360VR tool is designed primarily to support this. If one has simply recorded a single video with one GoPro or camcorder with an in-built microphone, then the tool offers nothing that a traditional video player and transcription software cannot adequately provide. Furthermore, if you wish to transcribe from a video or to use computational tools to analyse a database of transcripts, then there is more appropriate software for the job. This tool fits snugly between collecting rich video data and undertaking exploratory ‘hands-on’ analysis of a single scene, possibly with a rough transcript as a guide.

6. The AVA360VR prototype

Since 2016, developers have been working on consumer virtual reality (VR) environments to support collaboration and the editing of 3D models and animations. A credible sense of immersion in the scene and tangible interaction with the video can be sustained when using virtual reality technology today.[3] In order to build and interact with 3D worlds, two powerful software packages have been developed over the past ten years, namely Unity and Unreal. It may at first glance seem strange to use software developed for gaming, but these concerns dissipate if one thinks of the astute solutions they offer to the problems of 3D modelling, object manipulation, spatial audio, virtual cameras and interactivity. For AVA360VR, the Unity game engine was used to build the virtual reality environment in which the prepared 360° video could be viewed and annotated in a more intuitive way than on a 2D computer monitor. Preliminary testing of AVA360VR by scholars, some computer literate and others with only a basic competence, has been highly positive. In hardware terms, the project was developed with the HTC VIVE room-scale virtual reality head-mounted display and controllers, which were first released on the consumer market in spring 2016.

The fundamental idea of AVA360VR is to project a passive 360° video onto the surface of a sphere in 3D space and to track the researcher’s body, head and hand movements in the real world. With very little latency, the researcher sees the projected video as if one were inside the 360° video and could turn in any direction. With hand controllers, the video that the analyst views can be manipulated virtually and annotated spatially and temporally using markers, tags, drawings, graphical elements, animations and voice notes. Below, I seek to illustrate the functionality of the prototype and explain why it is best thought of as inhabiting video.

7. Inhabiting video for preliminary visual analysis

In the following embedded 2D video clip, which is a composite of four videos, we see an extract from the first public demonstration of an early prototype to a workshop group at the Big Video Sprint conference in November 2017.

Two researchers are consecutively annotating the same video clip from the team camerawork training session using the head-mounted display. The main video of the live screen capture shows what the analyst is seeing and doing, and the other three videos show different perspectives on the scene, i.e. one can see the analyst in the head-mounted display and the audience who is watching the live screen. Initially, the audio from inside the virtual reality experience is only heard locally through the headphones for its spatial effect. After a while, it is ported through speakers as well and becomes audible in the room. Unfortunately, the 2D remediation of the view-port of the head-mounted display does not give a strong sense of what it is like to wear the stereoscopic head-mounted display. Viewing in this manner is underwhelming and sometimes disorientating. It is quite a different and more immersive experience to actually try it oneself.

Building upon this early prototype, tools that can manipulate the video were added. For example, a visual timeline can be resized and moved around the 3D workspace. The ability to add voice annotations and draw on the video surface with a laser pointer were added. These annotations are marked with a selectable icon on the timeline. Annotations can be played back at a later date in the context of the video in which they were made. To help view the scene simultaneously from multiple camera angles, additional video footage can be imported and pinned to the current 360° video sphere. The video screens are resizable and can contain a mirrored or zoomed view of another part of the scene. Furthermore, typed transcripts can be brought into the 3D space as objects that trigger video playback, and vice versa. A workflow has been developed that exports a transcript with embedded frame numbers and a uniform tabbed layout, allowing one to then import this raw text transcript file into AVA360VR, retaining the layout while in virtual reality. Using other software, the transcript can be simultaneously edited live by others present, for example, in a data session.

In the next embedded 2D video clip, we see a live screen capture of the prototype being used to annotate the same camerawork training footage. This and the fifth clip were made in January 2018 with an alpha version of the prototype. The functions shown do not necessarily reflect those in the final prototype.

The captured video shows the monoscopic point-of-view of the analyst. At the top left of the frame is a stereoscopic representation of what the user sees in the headset, which is more faithful with regards to the actual field of view. The examples in the clip illustrate some of the basic functionality of AVA360VR. The functions shown are (a) scanning the field of view in 360°, (b) using the controllers, (c) using the timeline, (d) annotating, (e) inserting simultaneous video streams, (f) re-caming,[4] and (g) deploying interactive transcript objects. Many of these remediate familiar operations – such as pointing, grabbing, drawing and watching two monitors – but with added functionality because of their virtualisation.

To complement the live capture video, the fifth embedded video clip comprises a sequence of static 360° panoramic screenshots of the AVA360VR interface that enables the analyst to inhabit the video recorded in the camerawork training session shown in the first and second videos. The screenshots are high-resolution image captures of the complete field of view triggered live by the user inside the head-mounted display from whatever position they desire (and stored to disk). The screenshot image function and the live screen capture video function allow others not in virtual reality to view the results of the use of the AVA360VR tool at a later date. This also provides another form of documentation of the phenomena in question, as this paper demonstrates.

The screenshots play in sequence as a 360° video, so they can be paused at any time, and one can look around the scene by turning one’s head or using the mouse or one’s finger. Screenshots of a very early prototype and of the various functionalities of the tool in its current iteration are included.[5] The various tool menus are illustrated, as are the locations and names of the participants as well as the cameras and microphones they are operating. Another screenshot shows the positioning of video inserts into the space, and annotations to show the positions, movements and gestures of the participants in relation to the virtual tour group. AVA360VR makes it easy to insert multiple video windows into the user space and annotate key actions and movements on the 360° video.

Two of the screenshot sequences illustrate how AVA360VR can be used to elicit preliminary analyses of a complex scene recorded with one or more 360° cameras and to present a visual argument for the interactional accomplishment of the phenomena in question. A complete analysis of both cases is not presented here, since the goal in this paper is simply to show examples in which the tool precipitated and helped document an emerging analysis from its embodied use-in-development. In both cases, the visual arguments are reproduced in the two figures below, but they can only be fully appreciated when watched in a 360° viewer and interactively in AVA360VR.

The first case (Example 4 in the screenshot clip) shows how, while working with the video clips in the head-mounted display, it becomes obvious that the participants are mutually constituting a virtual space in front of the guide, around which a formation of imaginary tour group members were assembling in a side-by-side arrangement in order to see and hear the guide (see De Stefani & Mondada, 2014 for an extensive analysis of mobile formations of real guided parties). Much as Stukenbrock (2017) has shown in her analysis of the mutual enactment of “intercorporeal phantasms” in self-defence training for girls, the participants in the camerawork training exercise stage their actions in relation to the imagined bodies, spaces and movements of a cohort of virtual members. For example, Peter draws an arc with a pointing gesture (also indicated in line 47 in the transcript) to indicate the boundaries of the cohort, which is annotated in the first part of the screenshot sequence (see Figure 1 for an equirectangular representation of the 360° frame, with some of the annotations made using the tool). Unfortunately, the equirectangular format does not view well on the 2D page. The image is distorted, and the embedded text and inserted video screens are warped. When viewing the embedded video on YouTube, these anomalies disappear.

Bethany reiterates the arc with another gesture (lines 67-68, as drawn on the video in Figure 1) and asks about the spatial configuration of the cohort. Peter moves along the boundary of the cohort towards Christina and reinforces the imaginary boundary (lines 69-73, as drawn on the video in Figure 1). The point here is that it is very difficult to envisage the space in which conduct unfolds and which is accomplished by the participants’ interaction simply by viewing the 360° video footage on a 2D monitor (or by just looking at the footage from the 2D cameras).

A further screenshot sequence demonstrates a second case (Example 5 in the video clip) in which the tool facilitated the emergence of an alternative analysis. The issue was how one could clarify the coordination of mutual gaze by Peter and Christina that precedes Peter’s instruction gesture and Christina’s subsequent movement to a better filming position (also indicated in lines 17-28 in the transcript). At first it was assumed that Peter gestured, Christina noticed, and then she moved. However, as Goodwin (1981) has so eloquently argued, the coordination of gaze is often an accomplishment before, and a condition for, the accomplishment of an action. With 360° video and other complex video streams, this can be difficult to show without carefully syncing and editing the videos – which is time-consuming and requires skill with a non-linear editor – simply because one cannot look everywhere at the same time in a 360° viewer. With AVA360VR and the ‘rear mirror’ function, one can ‘re-cam’ any other part of the visual field, as though one were looking in a rear-view mirror to see what was happening behind you without turning around. Thus, one can zoom in to focus on the action in another part of the scene and display it synchronously alongside the visual action to which it corresponds. The evidential use of the rear-view mirror is illustrated in an edited screenshot sequence exported from the tool (see views A, B and C in Figure 2).

It shows four frames taken at different timecodes, demonstrating that first (A) Peter monitors Christina’s camerawork and position, but she is looking away; second (B) she turns her gaze towards him; third (C) he points to a new location (annotated live on the image); and fourth (D) she nods and begins to move towards that location (annotated live on the image). This indicates that she recognises the instructive nature of the pointing gesture.

In both cases, these tentative steps towards analysing the video by assembling visual evidence in 360° space only took a minute to create and save to disk in AVA360VR. Without this tool, it would only be possible to do the equivalent by careful playback of multiple, individual video files, by extracting multiple video frame stills, and by using image processing software to mock up each part of the sequence from the cropped frame stills. This would take most scholars hours of painstaking work.

8. Conclusion

One goal of this paper has been to give readers some insight into the reasoning behind the development of the AVA360VR prototype and some of its practical uses when analysing social interaction using 360° video footage. The tool is the result of two years of intensive development and practical innovation with new technologies and software. Of course, developing a tool such as this is driven by the needs of researchers in ethnomethodological conversation analysis to cope with new forms of data collection with unfamiliar technologies that may lead to enhanced methods for performing analysis in teams. Indeed, the tool is the result of direct collaboration between scholars fluent in ethnomethodological conversation analysis and software developers, rather than pandering to the interests of computational linguists or corpus analysts. Consequently, the tool has been iteratively developed to support some of the ways in which we work, or wish to work, on video data. The long-term goal is to enable researchers to analyse video in a more tactile, tangible and palpable fashion: to handle, re-perspectivise and share video in temporal and volumetric dimensions in the early stages of analysis. Moreover, AVA360VR can be used in data sessions, innovative conference venues and more adventurous publication formats. It can also serve as an archiving tool. The hope is that a refined prototype may have wider applicability to qualitative studies of video. Several developments and enhancements are in progress and planned in the future. For example, a richer annotation and animation interface is under development, as is a collaborative mode for two or more analysts to inhabit the 360° video together, with better tools for manipulating multi-cam video and generating novel interactive visualisations of the 360° video footage across time in virtual reality. A staging engine is being developed in parallel. The goal is to create alternative modes of presentation that can harness both the virtues of virtualisation and interactive ‘staging’ inherent in 3D gaming engines and the scenographic turn in performance art and theatre that informs the best examples of the emerging virtual reality documentary genre.

Whether such a prototype will prove useful in the long run is hard to determine. Some will think it overly technical and time-consuming to learn, with little analytical purchase. Others will see the spread of camera gadgets and computer technologies into the field as detracting from the real task, namely analysing social interaction recorded using only a single camera and/or audio recorder, which is perfectly adequate for any analytical claim to be made. Some may argue that tools such as AVA360VR invite the claim that one is in some way closer to the event or the recording, short-circuiting the transcript in the sense that the traditional transcript is no longer needed to document good analysis. This is not my claim, though I admit it is a seductive danger posed by supposedly more immersive technologies. Following the reservations of Ashmore and Reed (2000), it is important to reflect upon how claims that one can bypass the ‘biased’ intermediary ‘writing’/graphe of transcription through a more direct mediation of the event via transparent access to the ‘tape’ or the ‘record’ are fraught with problems, both ethical and methodological. This paper does not purport that it is possible for one to become an unmediated ‘witness’ of the event, and it does not contend that one can so easily escape issues of evidential adequacy, interpolation, parallax and perspectivation. These tensions, on the contrary, are simply folded down to the new apparatus of capture and presentation – in this case, 360° video and interactive 3D environments – which inevitably involve forms of ‘writing’ in the broad sense, but which now occupy expanded dimensions of space and time.

My firm belief is that a ‘scenographic turn’ and the development of practical digital tools to work with 360° video – and other streams of sensory data – in new ways will lead to small and unexpected methodological innovations, such as new forms of volumetric visual argumentation and analysis. Of course, one can legitimately complain that technical, temporal and financial overheads make this prohibitive for most scholars. These are important considerations, but costs will come down, and more importantly, we do need to learn how to work in more sophisticated ways with spatiality and volumetricity, with light and sound and other senses in space, and with embodiment and movement, in order to understand and analyse social conduct. It will be a steep learning curve for new generations of scholars.

9. Acknowledgements

I would like to thank the BigSoftVideo development team for the leaps and bounds we have made in the last few months of 2017. Jacob Davidsen has been the perfect colleague in our crazy Big Video endeavour, and student assistant Nicklas Haagh Christensen has been our devoted software developer. Without him there would be no implementation, just some crazy ideas. Thanks also to the team in the camerawork training session for taking part and giving their permission for the recordings to be used in this paper and uploaded to YouTube. Equipment for this research in 2017 was supported by the Danish Digital Humanities Lab infrastructure project.

10. Appendix: Transcript

|

|

|

11. References

De Stefani, E., & Mondada, L. (2014). Reorganizing mobile formations: When 'guided' participants initiate reorientations in guided tours. Space & Culture, 17(2), 157-175.